Welcome to the Sequenced Show, Attend, and Tell Site.

We present Sequenced Show, Attend, and Tell: Natural Language from Natural Images, a machine translation-inspired framework to perform automatic captioning from images. Given an input image of a scene, our algorithm outputs a fully formed sentence explaining the contents and actions of the scene. Our method uses an LSTM-based sequence-to-sequence algorithm with global attention for generating the captions. The input to our algorithm is a set of convolution features extracted from the lower layers of a convolutional neural network, each corresponding to a particular portion of the input image. Following this, using a global attention model, the inputs are used to generate the caption one word at a time with the LSTM “focusing” on a portion of the image as dictated by the attention model.

We compare our proposed method with a number of different methods, including the attention- based method of Xu et al. (2015) as well as the attention-less method of Vinyals et al. (2015). Addi- tionally, we present results both with and without the use of pretrained word embeddings, with the use of different CNNs for feature extraction, the use of reverse ordering of the source input into the LSTM, and the use of residual connections. We find that our proposed method is com- parable with the state of the art. Further, we find that the use of pretrained word embeddings, different CNNs, reversing the ordering of the input, and the use of residual connections do not have a large impact on system performance.

Codebase

This repository contains the necessary code to train and run the Sequenced Show, Attend, and Tell model. The Flickr-8K dataset can be used for training. We suggest using a GPU for training.

Example Results

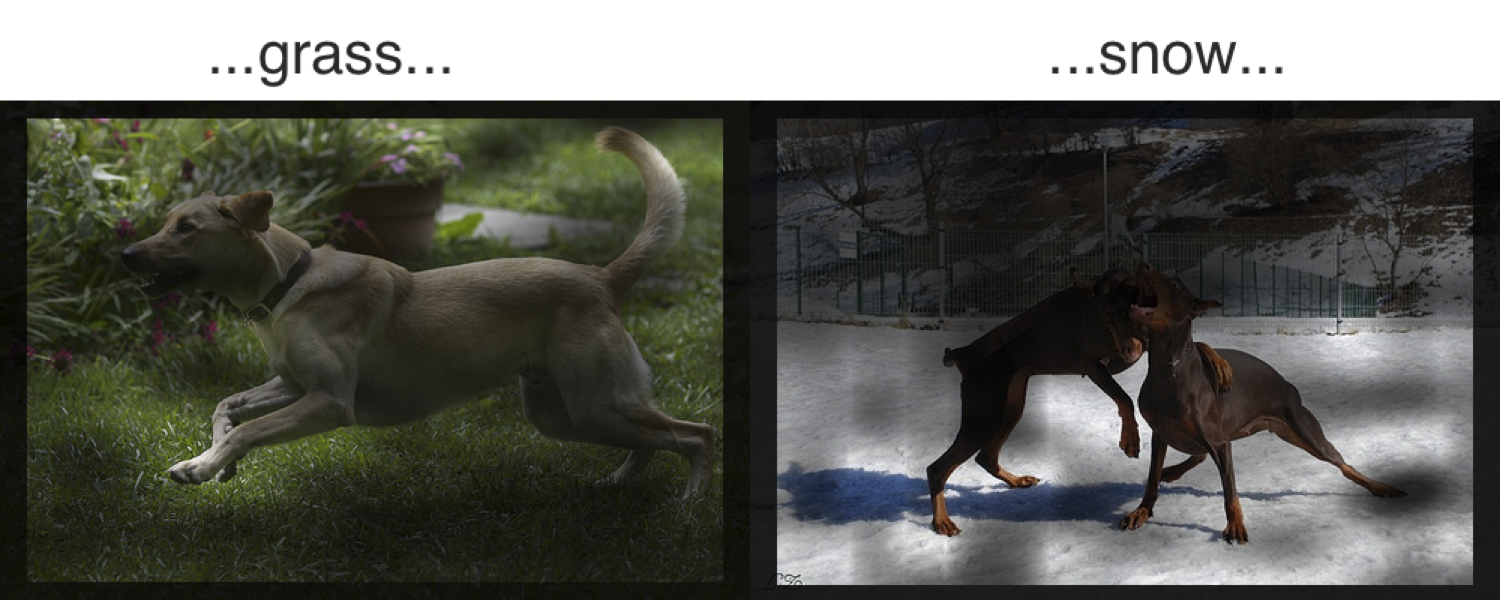

The figure above shows example words from captions generated with our proposed Sequenced Show, Attend, and Tell method, where each word of the caption is presented above a visualization of the areas in which the attention model focuses in generating the word at its given timestep. Note that in both examples, the attention focuses on the grassy field when generating the word “grass” in the caption “A brown dog is running through the grass” and the snowy background when generating the word ”snow” in the caption “Two brown dogs play in the snow.”

The figure above shows example words from captions generated with our proposed Sequenced Show, Attend, and Tell method, where each word of the caption is presented above a visualization of the areas in which the attention model focuses in generating the word at its given timestep. Note that in both examples, the attention focuses on the grassy field when generating the word “grass” in the caption “A brown dog is running through the grass” and the snowy background when generating the word ”snow” in the caption “Two brown dogs play in the snow.”

Example Gallery

A full example gallery can be seen at: http://steerapi.github.io/seq2seq-show-att-tell/flickr8k/pages/index.html

Preprocessed features for the Flickr-8K, Flickr-30K, and Microsoft COCO datasets can be found at https://drive.google.com/folderview?id=0Byyuc5LmNmJPQmJzVE5GOEJOdzQ&usp=sharing